London Calling: Two Venues, 16 Teams, One Remarkable Evening for Merlin Alchemy

By John Tredennick

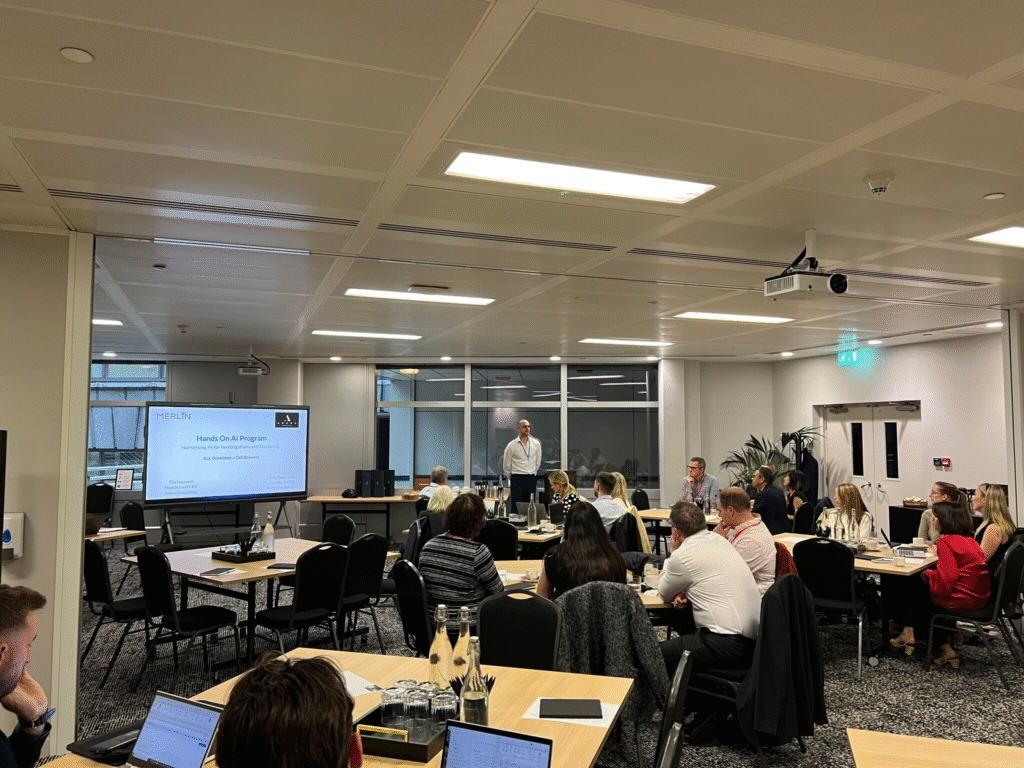

Last week, we did something we had never attempted before: running two simultaneous hands-on AI programs across London on the same night, one sponsored by HKA Global and the other by Arkus Consulting. The venues and case materials differed, but all 16 teams shared the same transformative experience in the largest programs we’ve ever conducted.

At HKA, teams investigated the BP Deepwater Horizon oil spill trial alongside Darren Mullins, HKA’s global investigations partner. Across town at Arkus, teams tackled the UK Post Office Horizon scandal with Tim Harrison, founder and CEO of Arkus Consulting. Each team faced a different complex legal topic and had just 45 minutes to analyze 15,000 documents they’d never seen before, using Alchemy for the first time.

The results exceeded every expectation.

What Happened

As teams presented their findings, something remarkable happened. Each report was more detailed than the last. Teams that had never touched the documents—never used our platform—were delivering sophisticated legal analysis complete with citations, contradictions in testimony, and nuanced conclusions.

The consistent reaction: amazement that they could master such complex material in under an hour.

Then Came the Last Team at HKA

They drew this question: “Did BP’s onsite team pressure operators to start the well before quality control was complete? If so, who at BP pushed the deadline, and which operators were pressured?”

The team reported their frustration. “We kept getting unsatisfactory answers from Alchemy. We tried different prompts, rephrased the question multiple ways. Every time, we got essentially the same response, which we were convinced was wrong.”

The Teaching Moment

Here’s what happened next.

When the team finally reviewed Alchemy’s responses carefully—really read what the AI was telling them—they realized something crucial: Alchemy had been giving them correct answers all along.

The system wasn’t being evasive or malfunctioning. It was being accurate.

Alchemy had synthesized thousands of pages to tell them exactly what the evidence showed—not what they expected it to show.

Why This Matters

This moment captures something essential about AI in legal work. The technology isn’t there to tell you what you want to hear. It’s there to tell you what the evidence actually says, even when—especially when—that’s more nuanced than expected.

The team’s experience reflects a fundamental shift happening in legal practice. We’re moving from “finding documents that support our theory” to “understanding what the complete evidence actually shows.”

That requires trusting technology that’s often more thorough and accurate than we expect.

Both London programs demonstrated that legal teams can quickly master sophisticated AI-driven analysis. But they also revealed something deeper: the real learning curve isn’t technical—it’s psychological. It’s learning to trust accuracy over expectation.

About the Author

John Tredennick (jt@merlin.tech) is the CEO and Founder of Merlin Search Technologies, a software company leveraging generative AI and cloud technologies to make investigation and discovery workflows faster, easier, and less expensive. Prior to founding Merlin, Tredennick had a distinguished career as a trial lawyer and litigation partner at a national law firm.

In 2000, drawing on his expertise in legal technology, he founded Catalyst, an international ediscovery technology company that was acquired in 2019 by a large public company. Tredennick regularly speaks and writes on legal technology and AI topics, and has authored eight books and dozens of articles. He has also served as Chair of the ABA’s Law Practice Management Section.