Are LLMs Like GPT Secure? Or Do I Risk Waiving Attorney-Client or Work-Product Privileges?

Note: This article was published in Law360 on August 17, 2023.

By John Tredennick and Dr. William Webber

In our discussions and webinars about our work with GPT and other large language models, we regularly hear concerns about security. Are we risking a waiver of attorney-client or work-product privileges by sending our data to OpenAI? What if that data contains confidential client information or documents needed by GPT before it can do its analysis?

Our answer is uniformly: No, you’re not taking a risk, at least not if you are using a commercial license for the service. Microsoft and the other major large language model providers include solid non-disclosure and non-use provisions in their commercial contracts. They are easily as strong as the ones included in your Office 365 licenses. They provide the same reasonable expectation of privacy you have when you store email and office files in Azure or AWS.

In our view, the security risk when you send prompts to GPT in Azure is no greater than hosting email and office files on Azure. Here is why.

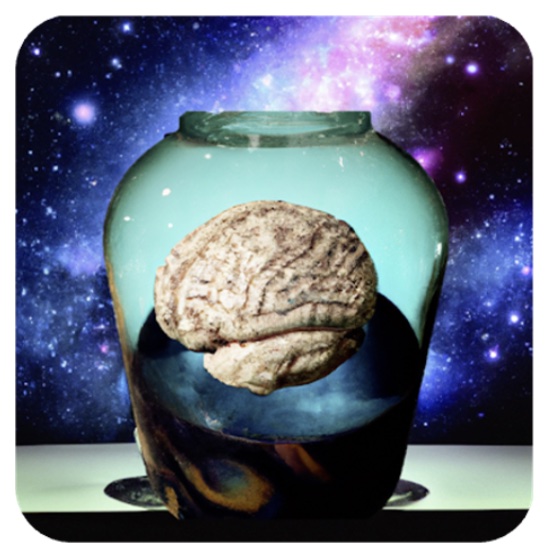

A Brain in a Jar?

Let’s start with GPT itself. Early on, people feared that GPT would learn from and possibly repeat to others the information you provided in a prompt (i.e. the question or other information you sent to GPT). That is not the case.

While large language models like GPT are trained on vast amounts of information gleaned from the Internet, they can’t absorb new information sent via your prompt. It is a matter of its architecture. Its knowledge is based solely on its prior training.

This is why GPT can’t answer questions about events occurring after its training cutoff of September 2021. Ask it which party did better in the 2022 midterm elections and you will get a response like this:

“I’m sorry, but as of my training cut-off in September 2021, I don’t have information on events or data past that point. Therefore, I cannot provide results for the 2022 midterm elections. Please consult a reliable and up-to-date source for this information.”

In that regard, GPT is like a “brain in a jar.” The brain consists of a massive amount of pretraining (that is what the P stands for in GPT) powered by lots of servers running expensive GPU chips. The knowledge base was set at the time of the training cutoff. This brain cannot learn from any of the information you submit in your prompts, nor can it remember anything it told you in response.

So, let’s start by specifically answering two questions that everyone seems to be asking:

- Can GPT learn from and remember the information or data I send to it in a prompt?

No, it cannot. It does not have the ability to learn from your prompts or even remember them.

- Can GPT intentionally or even inadvertently share information I send in a prompt to other users?

No, it cannot. It does not remember anything sent in a prompt beyond creating its answer (as we discuss below). As a result, there is absolutely no danger of it sharing information you send to another user.

If All That Is True, How Does ChatGPT Remember Our Conversations?

One of the most fascinating parts about ChatGPT (the web front end that originally caught everyone’s attention) is its ability to engage in extended conversations with users. If GPT has no memory, how can ChatGPT remember our conversations?

The simple answer is this. Behind the scenes, OpenAI saves your conversations and resends as much of that text as possible with the new prompt. That is also the reason ChatGPT users can return to earlier conversations and pick up where they left off.

We will tell you more about this process a bit further in the article because we and others use this technique as well.

Ok, Then How Does GPT Answer My Questions?

That is the next logical question. If GPT has no memory and can’t learn from my questions, how can it answer them? That is the purpose of what we call a “context window.”

What is a context window? It is where the large language model thinks. It is the place where you input your files for analysis. It is the place where the large language model submits its answers.

A context window is like RAM or a whiteboard that stands between the large language model (brain) and your prompt. Prompts you send go on the whiteboard, which the large language model can read. When GPT or another large language model responds, it sends its analysis to the whiteboard, which can be relayed to you. If we want the large language model to analyze our discovery documents, they must be sent to the whiteboard before the large language model can read and analyze them.

Owing to cost and computational complexity, early large language models offered a context window of 2,000 tokens (about 1,500 words) or less. You can imagine the problem that was created. You could send an email or two to the large language model for analysis but not much more. Many discovery documents are larger than 1,500 words.

As it came out with new versions, OpenAI increased the size of its context window to 4,000 tokens, then 8,000, 16,000, and finally to 32,000 tokens with the most expensive version of GPT 4.0. A bit later Anthropic shook things up by releasing its large language model called Claude 2 with a 100,000 token context window (about 75,000 words).

Why not bigger context windows? The technology being used for these large language models came at a high cost. Computing costs for larger context windows increased in a quadratic fashion, meaning that if we double the length of the context window, the costs to run the chatbot quadruple. GPT 4.0 already costs millions of dollars to run. Even Microsoft has to blink at the thought of increasing cost in quadratic fashion.
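The arithmetic behind these figures can be sketched in a few lines of Python. The 0.75 words-per-token ratio is inferred from the numbers quoted above (2,000 tokens for about 1,500 words), and the purely quadratic cost model is a simplification for illustration, not any vendor's actual pricing formula.

```python
# Rough arithmetic for context-window sizes.
# Assumption: about 0.75 words per token, inferred from the article's
# figures (2,000 tokens ~ 1,500 words; 100,000 tokens ~ 75,000 words).
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit in a window of `tokens` tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def relative_cost(tokens: int, baseline_tokens: int) -> float:
    """Relative compute cost under a purely quadratic model (a simplification)."""
    return (tokens / baseline_tokens) ** 2


if __name__ == "__main__":
    for window in (2_000, 4_000, 8_000, 16_000, 32_000, 100_000):
        print(f"{window:>7,} tokens ~ {approx_words(window):>6,} words, "
              f"{relative_cost(window, 2_000):,.0f}x the cost of a 2,000-token window")
```

Under this simplified model, doubling the window from 2,000 to 4,000 tokens quadruples the cost, and a 32,000-token window costs 256 times as much as a 2,000-token one.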

What Do You Send to the Context Window?

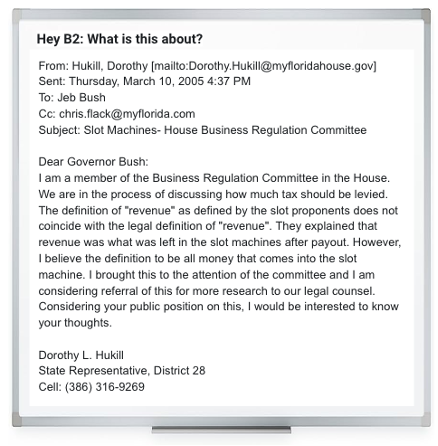

When we use GPT or other large language models for ediscovery, our goal is not to ask GPT general questions that can be answered based on its initial training. Rather, we want its help analyzing and synthesizing information from our (or our client’s) documents. In essence, we want to take advantage of GPT’s brain to provide analysis and insights about our private documents.

The process is simple enough to describe. We simply include the text of one or more documents in our prompt statements and ask GPT to respond based on the information we send. GPT quickly reads the prompt information and bases its answer on the contents provided. It literally reads the documents we send and answers our questions.

All of this happens in the context window, our virtual whiteboard.
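A minimal sketch of that prompt assembly might look like the following. The function names, the instruction wording, the sample documents, and the 8,000-token limit are all hypothetical, and the token estimate reuses the rough 0.75 words-per-token ratio implied earlier in this article. The point is only that the document text travels inside the prompt itself.

```python
# Hypothetical sketch: placing document text in the prompt (the "whiteboard").
# Names, wording, and the token estimate are illustrative assumptions.

def estimate_tokens(text: str) -> int:
    """Rough token count, assuming about 0.75 words per token."""
    return round(len(text.split()) / 0.75)


def build_prompt(question: str, documents: list[str], max_tokens: int = 8_000) -> str:
    """Combine an instruction, the documents, and the question into one prompt."""
    parts = ["Answer using only the documents below."]
    for number, text in enumerate(documents, start=1):
        parts.append(f"--- Document {number} ---\n{text}")
    parts.append(f"Question: {question}")
    prompt = "\n\n".join(parts)
    # Everything must fit in the context window, or the model cannot read it.
    if estimate_tokens(prompt) > max_tokens:
        raise ValueError("Prompt would not fit in the context window")
    return prompt


if __name__ == "__main__":
    docs = [
        "Email from A to B: the shipment was delayed until March.",
        "Memo: the delay was caused by a supplier dispute.",
    ]
    print(build_prompt("Why was the shipment delayed?", docs))
```

A real system would send the resulting string to the model through its API; that call is omitted here because its details depend on the provider.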

Bing uses the same technique to answer questions that go beyond GPT’s training cutoff. When you ask Bing a question, it immediately runs an Internet search to find relevant documents. That information, in the form of four to five relevant articles, is sent to GPT with a request that GPT read the articles and use that information to answer a question. Again, GPT does not and cannot absorb the information it reads in the course of answering your questions.

Does the Context Window Present A Security Concern?

Not really, and certainly not when compared to storing all your email and documents on servers in Azure or AWS.

Why is that? Because information in the context window is transitory. With one exception discussed below, the information you send to the context window is not stored on servers or hard drives. Once your question is answered, the context window is cleared and ready for your next question.

If It’s Transitory, How Does a Large Language Model Remember Our Conversation?

The answer is simple. Behind the scenes, we resend all of the information being analyzed to the large language model with each prompt. You might type in a short follow-up question after receiving a long answer from the large language model. However, behind the scenes, we resend all of the information that preceded your new question.
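The resending pattern can be sketched as follows. The `fake_model` function and the `Conversation` class are hypothetical stand-ins, not a real API. The stand-in model simply reports how many messages it was shown, which makes visible that it sees only what is resent on each call.

```python
# Hypothetical sketch of a chat wrapper around a stateless model.
# `fake_model` stands in for a real LLM call; it keeps no state between calls.

def fake_model(messages: list[dict]) -> str:
    """Pretend model: it can only see the messages passed in this call."""
    return f"(reply based on {len(messages)} message(s) in the context window)"


class Conversation:
    """Stores the transcript on the application side and resends it each turn."""

    def __init__(self) -> None:
        self.history: list[dict] = []

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        # The whole transcript so far is sent with every new prompt.
        reply = fake_model(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    chat = Conversation()
    print(chat.ask("Summarize the attached contract."))
    print(chat.ask("Which clause covers termination?"))
```

The memory lives in the application's `history` list, not in the model. If the transcript were not resent, the second question would arrive with no context at all.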

Could Microsoft Capture Information from the Context Window?

As a technical matter, the answer is yes, but that isn’t the end of the discussion. This concern initially arose during OpenAI’s release of ChatGPT for public beta testing in November 2022. In that release, OpenAI reserved the right to capture and save prompt information for further testing and training. As a result, legal professionals had reason to be concerned about sending confidential information to ChatGPT.

The concern remains valid with respect to the free beta program but not when you use GPT through a commercial license and its private API. While a vendor like OpenAI or Microsoft may technically have the ability to capture transitory information from the context window, they contractually agree not to do that for commercial license customers who access the system directly through an API.

Microsoft’s Service Terms: Microsoft offers this statement regarding its OpenAI services:

“Your prompts (inputs) and completions (outputs), your embeddings, and your training data:

- are NOT available to other customers.

- are NOT available to OpenAI.

- are NOT used to improve OpenAI models.

- are NOT used to improve any Microsoft or 3rd party products or services.

- are NOT used for automatically improving Azure OpenAI models for your use in your resource (The models are stateless, unless you explicitly fine-tune models with your training data).

- Your fine-tuned Azure OpenAI models are available exclusively for your use.

The Azure OpenAI Service is fully controlled by Microsoft; Microsoft hosts the OpenAI models in Microsoft’s Azure environment and the Service does NOT interact with any services operated by OpenAI (e.g. ChatGPT, or the OpenAI API).”

Doesn’t Microsoft Reserve the Right to Review Data?

Technically yes, Microsoft’s standard service terms include the right to monitor large language model activities for “abuse.” Here is the Microsoft statement:

“Azure OpenAI abuse monitoring detects and mitigates instances of recurring content and/or behaviors that suggest use of the service in a manner that may violate the code of conduct or other applicable product terms. To detect and mitigate abuse, Azure OpenAI stores all prompts and generated content securely for up to thirty (30) days. (No prompts or completions are stored if the customer is approved for and elects to configure abuse monitoring off, as described below.)”

The provision goes on to state:

“Human reviewers assessing potential abuse can access prompts and completions data only when that data has been flagged by the abuse monitoring system. The human reviewers are authorized Microsoft employees who access the data via point wise queries using request IDs, Secure Access Workstations (SAWs), and Just-In-Time (JIT) request approval granted by team managers.”

In our view, these provisions do not negate a client’s “reasonable expectation of privacy” because the purpose of monitoring is to address abusive use of the large language model. We discuss the contours of reasonable expectation of privacy in more detail below.

Opting Out of Abuse Review: Even if Microsoft’s reservation of rights did jeopardize the privilege, there is an easy solution. For private sites like those used for legal purposes, a client can ask Microsoft to make an exception to its abuse policy. If the request is approved, Microsoft will not allow its employees to review prompts for abuse.

Here is Microsoft’s statement:

“If Microsoft approves a customer’s request to modify abuse monitoring, then Microsoft does not store any prompts and completions associated with the approved Azure subscription for which abuse monitoring is configured off. In this case, because no prompts and completions are stored at rest in the Service Results Store, the human review process is not possible and is not performed.”

OpenAI’s Reservation for Abuse Monitoring: OpenAI similarly promises not to review communications between the user (prompt) and the system’s response. Here, for example, is the controlling provision from OpenAI’s service agreement:

“OpenAI terms: We do not use Content that you provide to or receive from our API (“API Content”) to develop or improve our Services. We may use Content from Services other than our API (“Non-API Content”) to help develop and improve our Services.”

API stands for application programming interface. It is a software intermediary that allows two programs to talk to each other without human involvement. In essence, it allows a system you create to speak directly with GPT.

Like Microsoft, OpenAI does reserve the right to inspect and monitor communications that violate its abuse policy:

“OpenAI retains API data for 30 days for abuse and misuse monitoring purposes. A limited number of authorized OpenAI employees, as well as specialized third-party contractors that are subject to confidentiality and security obligations, can access this data solely to investigate and verify suspected abuse. OpenAI may still have content classifiers flag when data is suspected to contain platform abuse.”

Also like Microsoft, OpenAI offers the option to seek a waiver from abuse monitoring for companies operating under a commercial license.

As we mentioned earlier, we don’t believe that monitoring provisions like these will cause a waiver of privilege or confidentiality but you may want to consider using Microsoft’s offering if that is a concern.

What About Using ChatGPT?

Much of the concern over LLMs and confidentiality stemmed from OpenAI’s initial release of ChatGPT as a free public beta. At the time, OpenAI warned users that it reserved the right to review prompt information both to improve the system and for abuse monitoring. These were the reservations that rightly caused concern in legal circles about preserving attorney-client privileges and client confidentiality.

Since its release, OpenAI has introduced new controls for ChatGPT users that allow them to turn off chat history, simultaneously opting out of providing that conversation history as data for training AI models. However, as discussed above, OpenAI did state that even unsaved chats would be retained for 30 days for “abuse monitoring” before permanent deletion.

More recently, OpenAI launched ChatGPT Enterprise which, according to OpenAI, offers enterprise-grade security and privacy, along with improved access to GPT-4. Specifically, OpenAI makes these promises regarding enterprise accounts:

- We do not train on your business data, and our models don’t learn from your usage

- You own your inputs and outputs (where allowed by law)

- You control how long your data is retained (ChatGPT Enterprise)

OpenAI goes further to state that authorized OpenAI employees will only ever access your data for the purposes of resolving incidents, recovering end user conversations with your explicit permission, or where required by applicable law.

Thus, if your organization has concerns about employees using ChatGPT for business purposes, consider taking an enterprise license for this purpose.

Read Your License

Ultimately, legal professionals have a responsibility to make sure that third-party providers are reputable and will preserve the confidentiality of any data that passes through their systems. When using a large language model, you should ensure that your agreement contains suitable non-disclosure and data protection provisions, just like you would with an email service or Internet-based document repository. Cross the T’s and dot those I’s and you should have no issue showing a reasonable expectation of privacy regarding your work.

Can We Trust Companies Like Microsoft to Protect Confidential Data?

Each of us has to make that decision and some may say no. However, we bet that the great majority of legal professionals have already answered yes to this question and have done so for good reason. Not only can we trust companies like Microsoft to act responsibly, but a breach by a rogue employee wouldn’t cause a waiver because it wouldn’t negate our reasonable expectation that the data would be kept confidential.

Think of it this way. For decades we have been handing confidential and privileged information to people driving UPS and FedEx trucks without hesitation. Could they access our data if they wanted to? Of course, just pull the zip tab on the envelope or package.

If a rogue employee did so, would privilege be waived? Not likely, because you have a reasonable expectation that this would not happen.

What Do the Courts Say?

So, what is all this talk about “reasonable expectation of privacy?” It is a key requirement for protection under the attorney-client privilege. In brief, the elements for privilege are these:

Communications between a lawyer and client for the purposes of obtaining legal advice are subject to attorney-client privilege so long as the communications are made in private and are not shared with third parties who are outside the scope of the privileged communications.

See, e.g. Wikipedia on Attorney-Client Privilege for more on this topic.

As we entered the digital age, people began asking the question: “Would I risk confidentiality or a privilege waiver to use email or even a cell phone to communicate with my clients?” The question arose because these service providers were certainly third parties, outside the scope of attorney-client privilege. And, at least in theory, they had access to the bits and bytes running through their systems, much as Microsoft does for the materials stored in Office 365.

In addressing analogous concerns, courts have taken the position that unencrypted email communications, even on a company server, do not result in a waiver of privilege so long as the person sending the communication had a “reasonable expectation of privacy.” See, e.g., Twitter, Inc. v. Musk, C. A. 2022-0613-KSJM (Del. Ch. Sep. 13, 2022) (Musk used Tesla/SpaceX email servers for Twitter-related legal communications); Stengart v. Loving Care Agency, Inc., 990 A.2d 650 (N.J. 2010) (personal legal communications made on work server). The Twitter court did so notwithstanding the fact that Tesla and SpaceX explicitly reserved the right to inspect company emails for any purpose (including, presumably, abuse of the email privileges).

Over the years, the Standing Committee on Ethics and Professional Responsibility for the American Bar Association has repeatedly affirmed that email communications did not waive the privilege so long as the communicator had a “reasonable expectation of privacy” in the communication. ABA Comm. on Ethics & Prof’l Responsibility, Formal Op. 17-477 (leaving an open question about message boards and cell phone use).

The same undoubtedly holds true for the use of web hosting services like those offered by Microsoft, Google or AWS as well as litigation support providers. All of these companies have access to your data at one point or another but all are under contractual obligations not to exercise that privilege except to protect their systems from abuse or misuse.

Should I Be Nervous?

In the early 1900s as technicians were stringing telephone wires across New York City, some members of the bar questioned whether the use of telephones might violate attorney-client privilege. Without summoning up an old debate, the answer was no, it did not.

The same questions were raised about the Internet and the use of email for communications, with many claiming that sending an email was akin to putting your advice on a billboard. Lawyers made the same argument as people began using cellphones and again when we considered moving to Microsoft Office 365.

It is natural to be concerned about using new technology when it is your obligation to protect client confidentiality or your legal advice to a client. Should we eschew the obvious benefits of an advanced AI tool like GPT for fear that we might be waiving privilege?

Our answer is no. There is nothing about using GPT that would negate our reasonable expectation of privacy, which is the cornerstone of the attorney-client and work-product privileges.

About the Authors

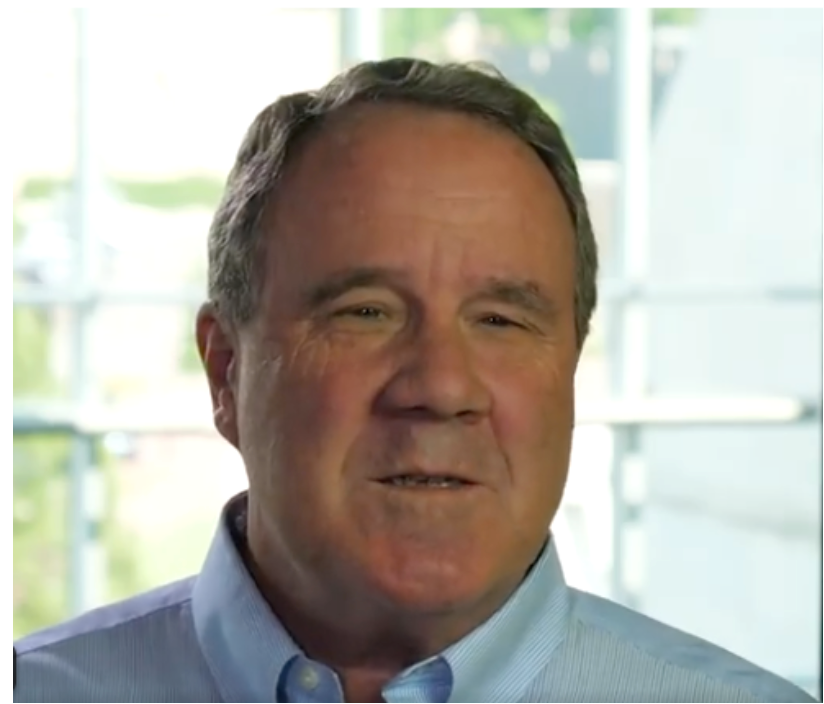

John Tredennick (JT@Merlin.Tech) is the CEO and founder of Merlin Search Technologies, a software company leveraging generative AI and cloud technologies to make investigation and discovery workflow faster, easier and less expensive. Prior to founding Merlin, Tredennick had a distinguished career as a trial lawyer and litigation partner at a national law firm.

With his expertise in legal technology, he founded Catalyst in 2000, an international ediscovery technology company that was acquired in 2019 by a large public company. Tredennick regularly speaks and writes on legal technology and AI topics, and has authored eight books and dozens of articles. He has also served as Chair of the ABA’s Law Practice Management Section.

Dr. William Webber (wwebber@Merlin.Tech) is the Chief Data Scientist of Merlin Search Technologies. He completed his PhD in Measurement in Information Retrieval Evaluation at the University of Melbourne under Professors Alistair Moffat and Justin Zobel, and his post-doctoral research at the E-Discovery Lab of the University of Maryland under Professor Doug Oard.

With over 30 peer-reviewed scientific publications in the areas of information retrieval, statistical evaluation, and machine learning, he is a world expert in AI and statistical measurement for information retrieval and ediscovery. He has almost a decade of industry experience as a consulting data scientist to ediscovery software vendors, service providers, and law firms.

About Merlin Search Technologies

Merlin is a pioneering cloud technology company leveraging generative AI and cloud technologies to re-engineer legal investigation and discovery workflows. Our next-generation platform integrates GenAI and machine learning to make the process faster, easier and less expensive. We’ve also introduced Cloud Utility Pricing, an innovative software hosting model that charges by the hour instead of by the month, giving clients substantial savings on discovery costs when they turn off their sites.

With over twenty years of experience, our team has built and hosted discovery platforms for many of the largest corporations and law firms in the world. Learn more at merlin.tech.
